Project FingerFly

Project abstract

Project FingerFly detects a specific hand gesture, the "hand gun" gesture. Using this gesture and the movement of the hand, we can navigate a quadcopter through space. The project uses the Intel RealSense SDK.

Introduction:

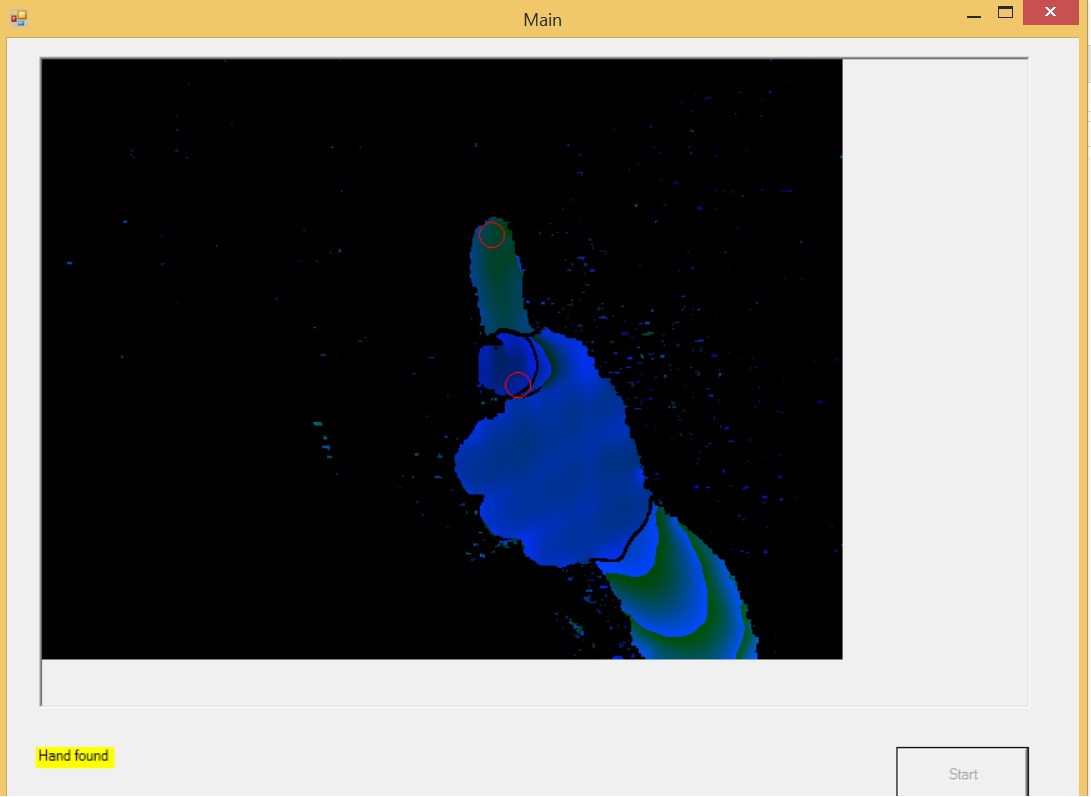

The application first detects the hand using the Intel RealSense hand recognition algorithm. It then detects the thumb and the index finger and uses them to determine a direction vector. The algorithm tries to reject hand gestures other than the "hand gun" using only the mathematical properties of this specific gesture.

*The application detecting the fingers.

How does it work?

- Search for a hand using the Intel RealSense SDK.

- Because the depth output is noisy, the tip of the index finger cannot be found reliably by simply searching for the pixel closest to the camera. To get over this obstacle, I built a depth histogram array containing the pixels' (x,y,z).

- Find the first local maximum in the histogram array (closest pixels to the camera).

- Go over all pixels that have the depth we found (they are contained in the histogram) and average their (x,y) to get an accurate location of the index finger.

- After finding the closest average point to the camera, we need to find the thumb (the highest point in the frame that contains data).

- We assume the points we have found are the thumb and index finger. Now we need to check whether they have the mathematical properties of the desired hand gesture.
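The histogram and thumb steps above can be sketched roughly as follows. This is an illustrative Python sketch, not the project's actual code and not the RealSense SDK API. It assumes the depth frame is a plain list of rows of integer depths, that 0 means "no data", and that row 0 is the top of the image. The names `find_index_tip` and `find_thumb_tip` and the parameters `bin_width` and `min_count` are hypothetical. The gesture check itself is not shown, since the exact properties it tests are not listed here.

```python
def find_index_tip(depth, bin_width=10, min_count=5):
    """Estimate the index fingertip as the average of the nearest dense depth bin.

    depth: list of rows of integer depth values, 0 meaning "no data" (assumption).
    bin_width, min_count: hypothetical tuning values, not taken from the project.
    Returns (x, y, z) as floats, or None if no suitable bin exists.
    """
    # Depth histogram: each bin keeps the (x, y, z) of the pixels that fell into it.
    buckets = {}
    for y, row in enumerate(depth):
        for x, z in enumerate(row):
            if z > 0:
                buckets.setdefault(z // bin_width, []).append((x, y, z))

    # Scan bins from nearest to farthest and stop at the first local maximum.
    # Requiring min_count pixels skips isolated noisy pixels close to the camera.
    for b in sorted(buckets):
        n = len(buckets[b])
        left = len(buckets.get(b - 1, ()))
        right = len(buckets.get(b + 1, ()))
        if n >= min_count and n >= left and n > right:
            pixels = buckets[b]
            return (
                sum(p[0] for p in pixels) / n,
                sum(p[1] for p in pixels) / n,
                sum(p[2] for p in pixels) / n,
            )
    return None


def find_thumb_tip(depth):
    """Return (x, y) of the highest row that contains data, averaged over that row."""
    for y, row in enumerate(depth):
        xs = [x for x, z in enumerate(row) if z > 0]
        if xs:
            return (sum(xs) / len(xs), float(y))
    return None
```

Calling `find_index_tip(frame)` and `find_thumb_tip(frame)` on each depth frame gives the two candidate points that the final gesture check would then accept or reject. Averaging over a whole bin, rather than taking the single nearest pixel, is what makes the fingertip estimate tolerant of depth noise.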

Video

Source code

Run FingerFly.exe, found in bin -> debug.

References

- Intel RealSense SDK